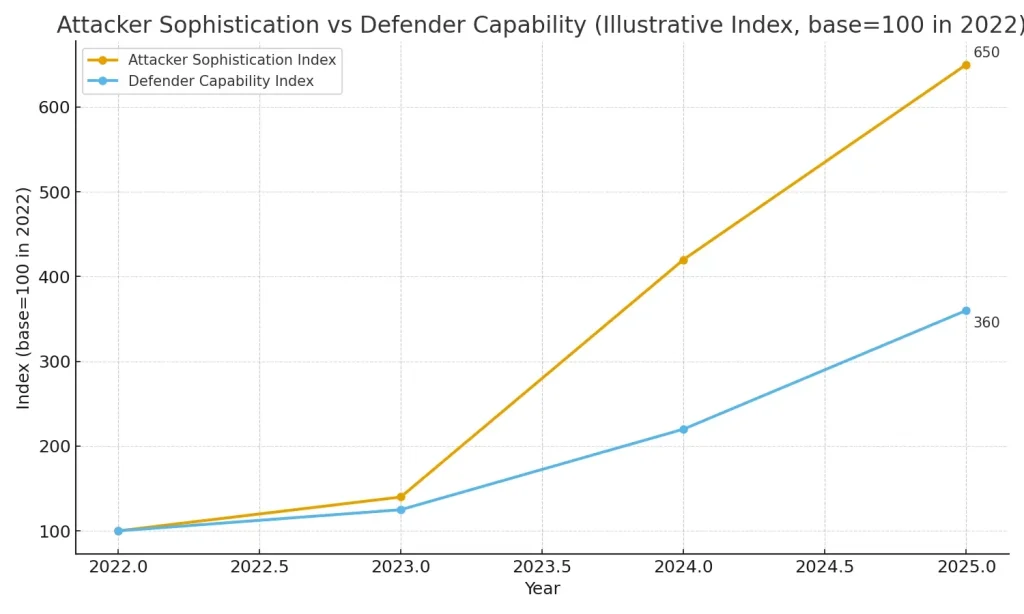

The cybersecurity landscape of 2025 has become an arms race where artificial intelligence serves as both weapon and shield. As organizations scramble to defend their digital assets, they face an uncomfortable reality: the same AI technology powering their defense systems is being weaponized by cybercriminals to launch unprecedented attacks.

The Dark Side: How AI Supercharges Cyber Attacks

The Phishing Revolution

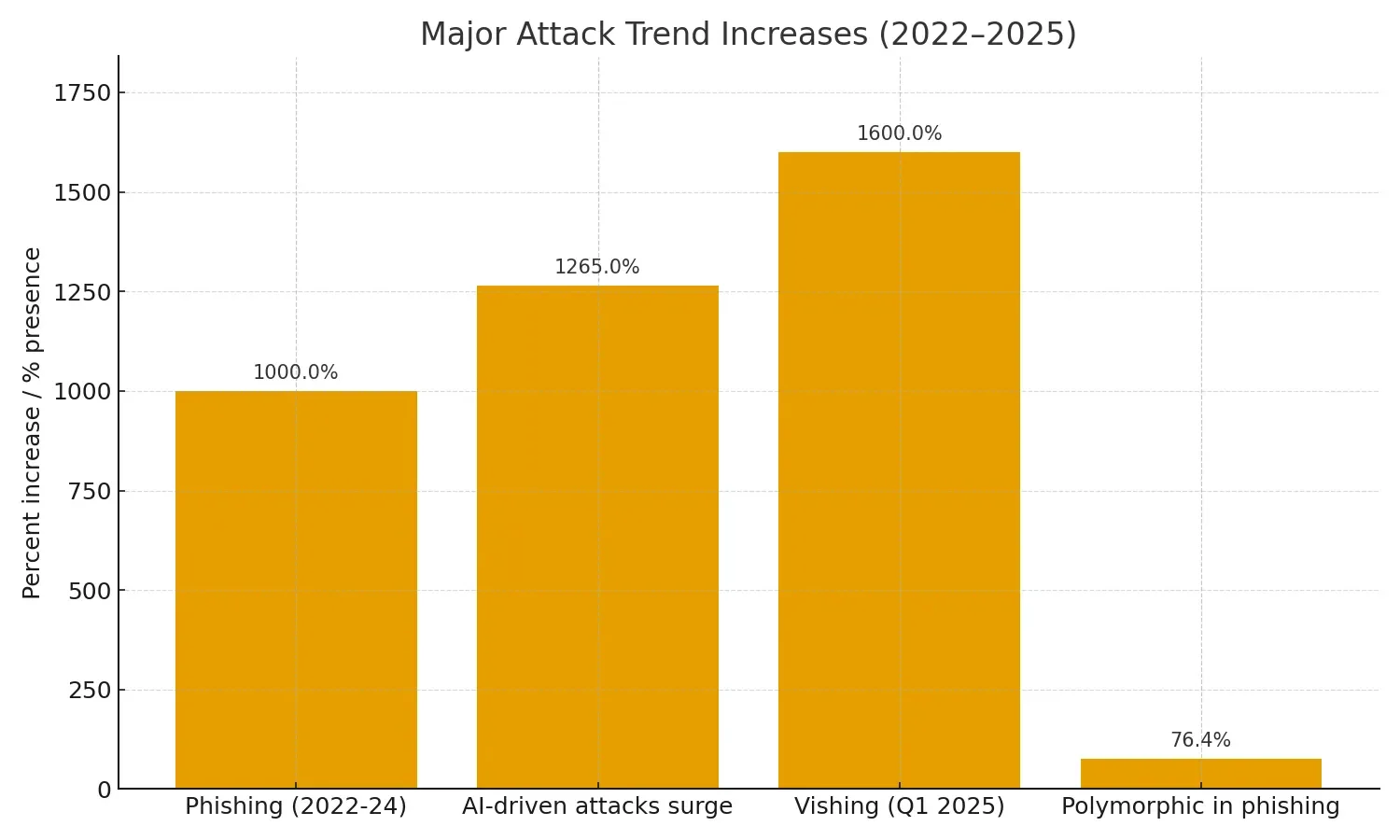

Phishing attacks grew by roughly 1,000% between 2022 and 2024, and AI-generated cyberattacks now rank as the most feared threat among IT professionals and cybersecurity experts. What makes these attacks particularly dangerous is their sophistication and scale.

Recent research reports that AI spear-phishing agents improved 55% relative to human red teams between 2023 and February 2025, and that by March 2025 AI was 24% more effective than humans at crafting phishing attacks. These are not theoretical concerns; they are daily realities.

How Cybercriminals Leverage AI:

- Hyper-Personalization at Scale: Attackers use tools like ChatGPT and DeepSeek to create phishing emails, generate audio and video for vishing attacks, and even create fake domains to gain credentials. The AI analyzes social media profiles, LinkedIn data, and corporate materials to craft messages that perfectly mimic colleagues or trusted partners.

- Perfect Grammar and Context: Gone are the days when spelling errors flagged phishing attempts. AI-generated phishing emails are polished, personalized, and contextually accurate, leading to higher success rates compared to human-written attempts.

- Polymorphic Malware: AI-generated polymorphic malware can create a new, unique version of itself as frequently as every 15 seconds during an attack, with polymorphic tactics now present in an estimated 76.4% of all phishing campaigns. This shape-shifting ability renders traditional signature-based antivirus tools nearly useless.

- Deepfake Deception: Voice cloning has become a preferred method for AI-enabled cybercrime, with one in 10 adults globally experiencing an AI voice scam, and 77% of those victims losing money. In one dramatic case, a Hong Kong finance firm lost $25 million to a deepfake scam involving AI-generated video of the company’s CFO.
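
The signature-evasion point in the polymorphic malware bullet can be illustrated with a harmless sketch. It assumes a deliberately simplified scanner that matches exact SHA-256 hashes (real antivirus engines use richer signatures), and the byte strings and the `KNOWN_BAD` set are hypothetical placeholders rather than real samples.

```python
import hashlib


def signature(payload: bytes) -> str:
    """Return the SHA-256 hex digest used here as a stand-in 'signature'."""
    return hashlib.sha256(payload).hexdigest()


# Hypothetical signature database containing one known-bad sample.
KNOWN_BAD = {signature(b"example-payload-v1")}


def is_flagged(payload: bytes) -> bool:
    """Exact-match lookup, as a purely signature-based scanner would do."""
    return signature(payload) in KNOWN_BAD


original = b"example-payload-v1"
mutated = original + b"\x00"  # a single padding byte changes the whole hash

print(is_flagged(original))  # True
print(is_flagged(mutated))   # False
```

Because any change to the bytes produces a different hash, a payload that rewrites itself on every delivery never matches a stored signature, which is why behavior-based detection matters.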

The Economics of AI Crime:

Perhaps most concerning is the democratization of these tools. What were once costly AI-driven phishing tools are now available for as little as $50 per week, putting sophisticated attack capabilities in the hands of low-skill criminals.

| Metric | Value / Note |
|---|---|
| Phishing growth (2022–2024) | ~1,000% increase |
| AI-driven attacks surge (reported) | ~1,265% increase (observational) |
| Vishing spike (Q1 2025) | ~1,600% increase |
| Polymorphic techniques present in phishing | ~76.4% of phishing campaigns (estimate) |
| Deepfake fraud victims (example) | High-impact cases (e.g., $25M loss reported in a deepfake CFO scam) |

The Bright Side: AI-Powered Defense Systems

While attackers wield AI as a weapon, security teams are fighting back with equally sophisticated AI-powered defenses that are transforming threat detection and response.

Real-Time Threat Detection Revolution

AI uses machine learning algorithms to analyze vast amounts of data and identify patterns that signal potential threats. By flagging anomalies and suspicious behavior rather than relying only on static rules and signatures, it can detect both known threats and previously unseen attacks.

How AI Enhances Defense:

- Behavioral Baseline Learning: Darktrace’s Enterprise Immune System mimics the human immune system by learning the normal behavior of a network. When it detects anomalies that deviate from this norm, it can identify potential threats, even those that have never been seen before.

- Lightning-Fast Response: IBM’s Watson for Cybersecurity uses natural language processing to read and understand vast amounts of security data, and when it identifies a threat, it can suggest or even implement responses automatically, such as quarantining suspicious emails before they reach inboxes.

- Predictive Analytics: AI-powered threat intelligence platforms continuously ingest and analyze immense volumes of data from a wide range of sources, enabling organizations to forecast potential vulnerabilities and attack strategies before attacks occur.

- Reduced False Positives: CrowdStrike’s Falcon platform uses AI to improve threat detection accuracy by analyzing behavior patterns and correlating data from various sources, distinguishing between legitimate activities and actual threats to reduce false positives.
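
The idea of behavioral baselining in the list above can be sketched with a simple z-score check. This is only a minimal illustration, assuming a single metric (hourly login counts) and a fixed threshold; it is not how any of the named products work internally, and the function names and sample data are hypothetical.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical history of logins per hour for one account.
hourly_logins = [12, 15, 11, 14, 13, 12, 16, 14]
baseline = build_baseline(hourly_logins)

print(is_anomalous(13, baseline))  # False: within the normal range
print(is_anomalous(90, baseline))  # True: far outside the baseline
```

Production systems model many signals at once and adapt the baseline over time, but the principle is the same: nothing needs to be known about the attack in advance, only about what normal looks like.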

Enterprise Leaders: Splunk and JPMorgan Chase

Splunk: Revolutionizing the Security Operations Center

Splunk has positioned itself at the forefront of AI-powered security operations, transforming how organizations detect and respond to threats.

Agentic AI for SecOps:

In September 2025, Cisco introduced Splunk Enterprise Security Essentials Edition and Premier Edition, providing customers agentic AI-powered SecOps options that unify security workflows across threat detection, investigation, and response. These aren’t just incremental improvements—they represent a fundamental shift in how security operations function.

According to Mike Horn, Splunk’s SVP and GM for Splunk Security, built-in AI can help cut alert noise and reduce investigation time from hours to minutes, positioning every SOC to stay ahead of advanced threats and empowering analysts at every level.

Key Capabilities:

- UEBA (User and Entity Behavior Analytics): Splunk’s enhanced UEBA capability continuously baselines and analyzes user, device, and entity behaviors, using machine learning models that adapt dynamically to uncover hidden risks and reduce alert fatigue.

- AI-Powered Triage: The Triage Agent uses AI to evaluate and prioritize alerts, reducing analyst workload and drawing attention to the most critical issues.

- Real-World Impact: Among organizations using AI and automation, 59% report moderately or significantly boosted efficiency, 78% cite faster incident detection, and 66% note quicker remediation as moderate to transformative benefits.

| Attack Technique | Role / Why it works | Illustrative share |
|---|---|---|
| Hyper-personalized Phishing | Uses social profiling and AI text generation to craft highly convincing messages | ~55% |
| Polymorphic Malware | Constantly mutates to bypass signature-based defenses | ~20% |
| Deepfake / Vishing | Voice/video cloning for fraudulent authorizations and social engineering | ~15% |
| Quishing (QR) / Mobile | Exploits mobile behaviors and QR trust; bypasses some email filters | ~6% |
| Other (supply-chain, credential stuffing) | Various automated attack vectors supporting larger campaigns | ~4% |

JPMorgan Chase: Banking on AI Security

As the world’s largest bank by market cap, JPMorgan Chase has made cybersecurity and AI a strategic priority at the highest levels of the organization.

Executive-Level Commitment:

JPMorgan Chase CEO Jamie Dimon states that AI shouldn’t be a part of the technology organization since it impacts all of the business, and the head of AI is at every single meeting he has with management teams. This isn’t just lip service—the bank has an $18 billion IT budget and is spending $2 billion specifically on AI initiatives.

Innovative Security Solutions:

JPMorgan Chase built the AI Threat Modeling Co-Pilot (AITMC), a solution that helps its engineers model threats earlier and more efficiently in the software development lifecycle. The results speak for themselves: AITMC has driven a 20% efficiency gain in the threat modeling process and uncovered an average of nine additional novel threats per model created.

Strategic Investment:

JPMorgan Chase unveiled a 10-year, $1.5 trillion Security and Resiliency Initiative, with up to $10 billion in direct equity investments, focusing on four key areas including frontier and strategic technologies covering artificial intelligence, cybersecurity, and quantum computing.

Dimon has acknowledged that cybersecurity is “the thing that scares me the most,” noting that people are now using agents to try to penetrate major companies and that the bad guys are already using AI and agents.

Practical Tips: Leveraging AI-Powered Security Tools

Based on industry best practices and lessons from leading organizations, here are actionable strategies for implementing AI-powered security:

1. Build a Layered AI Defense Strategy

Integrate Multiple AI Tools:

- Deploy AI-powered endpoint detection (like CrowdStrike Falcon or SentinelOne)

- Implement behavioral analytics (UEBA) for insider threat detection

- Use AI-enhanced SIEM solutions for centralized threat intelligence

- Add AI-driven email security to combat phishing

2. Implement Zero Trust Architecture

Cyber threats in 2025 evolve too rapidly for manual monitoring to keep up, and AI-driven platforms offer real-time visibility, anomaly detection, and automated responses to threats that traditional systems might miss. Combine this with Zero Trust principles that verify every access request, regardless of source.
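
The Zero Trust principle of verifying every request regardless of source can be sketched as a policy check that never consults network location. This is a minimal illustration under stated assumptions: the `AccessRequest` fields, the `ENTITLEMENTS` table, and the user and resource names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    resource: str
    mfa_verified: bool
    device_compliant: bool
    from_internal_network: bool  # recorded, but never used to grant trust


# Hypothetical entitlement table: which user may reach which resource.
ENTITLEMENTS = {"alice": {"payroll-db"}, "bob": {"wiki"}}


def authorize(req: AccessRequest) -> bool:
    """Allow only if identity, device posture, and entitlement all check out."""
    entitled = req.resource in ENTITLEMENTS.get(req.user, set())
    return req.mfa_verified and req.device_compliant and entitled


inside = AccessRequest("bob", "payroll-db", True, True, from_internal_network=True)
outside = AccessRequest("alice", "payroll-db", True, True, from_internal_network=False)

print(authorize(inside))   # False: on the internal network but not entitled
print(authorize(outside))  # True: external, yet every check passes
```

The design choice worth noting is what the function ignores: being inside the perimeter earns no trust, so a compromised internal host gains nothing by its position alone.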

3. Prioritize Continuous Monitoring and Testing

Best Practices:

- Enable 24/7 AI-powered monitoring across all endpoints

- Regularly scan AI models and applications to proactively identify vulnerabilities, covering container images, dependencies, fuzz testing, and AI-specific checks

- Conduct regular penetration testing that includes AI-specific attack scenarios

- Perform continuous threat hunting using AI to identify advanced persistent threats

4. Invest in Security Automation

Key Actions:

- Deploy Security Orchestration, Automation, and Response (SOAR) platforms

- Automate routine tasks like log analysis and vulnerability scanning

- Use AI to prioritize alerts based on risk severity

- Implement automated incident response playbooks for common threats
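
Risk-based alert prioritization, as listed above, can be sketched with a simple scoring function. The formula here (severity times asset criticality times detection confidence) is a hand-written stand-in for a learned model, and the `Alert` fields and sample alerts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    name: str
    severity: int           # 1 (low) to 5 (critical)
    asset_criticality: int  # 1 (lab machine) to 5 (crown-jewel system)
    confidence: float       # detector confidence, 0.0 to 1.0


def risk_score(alert: Alert) -> float:
    """Assumed scoring rule; a real platform would learn or tune this."""
    return alert.severity * alert.asset_criticality * alert.confidence


def triage(alerts):
    """Return alerts ordered from highest to lowest risk."""
    return sorted(alerts, key=risk_score, reverse=True)


queue = [
    Alert("port scan", 2, 2, 0.9),
    Alert("credential dump on domain controller", 5, 5, 0.8),
    Alert("suspicious macro", 3, 3, 0.5),
]

for alert in triage(queue):
    print(f"{risk_score(alert):5.1f}  {alert.name}")
```

Even a crude score like this puts the domain controller alert first, which is the practical goal: analysts spend their first minutes on the issue most likely to matter.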

5. Address the Human Element

Critical Steps:

- Conduct regular phishing simulations using AI-generated scenarios

- Train employees to recognize AI-enhanced social engineering

- Implement a human-in-the-loop approach to ensure AI-driven decisions are reviewed before they impact operations, preventing blind reliance on automated decisions

- Enable multi-factor authentication (MFA) everywhere to add defense layers
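
The human-in-the-loop step above can be sketched as a review gate that holds high-impact automated actions until a person approves them. This is a minimal sketch; the `ProposedAction` and `ReviewGate` classes, the impact flag, and the reviewer name are hypothetical, and a real deployment would persist the queue and record an audit trail.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProposedAction:
    description: str
    high_impact: bool
    approved_by: Optional[str] = None


@dataclass
class ReviewGate:
    pending: list = field(default_factory=list)
    executed: list = field(default_factory=list)

    def submit(self, action: ProposedAction) -> str:
        """Run low-impact actions at once; hold high-impact ones for review."""
        if action.high_impact and action.approved_by is None:
            self.pending.append(action)
            return "pending_review"
        self.executed.append(action)
        return "executed"

    def approve(self, action: ProposedAction, reviewer: str) -> str:
        """Record the reviewer, release the action, and execute it."""
        self.pending.remove(action)
        action.approved_by = reviewer
        return self.submit(action)


gate = ReviewGate()
low = ProposedAction("quarantine one suspicious email", high_impact=False)
high = ProposedAction("isolate production database host", high_impact=True)

status_low = gate.submit(low)
status_high = gate.submit(high)
status_after_review = gate.approve(high, "analyst-1")
print(status_low, status_high, status_after_review)
```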

6. Maintain Visibility and Control

Essential Components:

- Create an AI bill of materials (AI-BOM) to track all AI components

- Implement centralized policy management across cloud environments

- Use security tools that can map controls to specific regulatory requirements to simplify compliance demonstration

- Monitor for “shadow AI”—unauthorized AI tools employees might be using
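
An AI bill of materials and a shadow-AI check can be sketched together: the inventory is a list of records, and anything observed in use but missing from it is flagged. The record fields, component names, and the list of observed tools are hypothetical; there is no single mandated AI-BOM schema assumed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIComponent:
    name: str
    version: str
    supplier: str
    kind: str  # e.g. "model", "api", "library"


# Hypothetical AI-BOM: every AI component the organization has approved.
AI_BOM = [
    AIComponent("fraud-classifier", "2.1.0", "internal", "model"),
    AIComponent("example-llm-api", "v1", "Example Vendor", "api"),
]


def find_shadow_ai(observed_tools, bom):
    """Return observed tool names that do not appear in the AI-BOM."""
    approved = {component.name for component in bom}
    return sorted(set(observed_tools) - approved)


# Hypothetical tool names seen in proxy or SaaS usage logs.
observed = ["example-llm-api", "unvetted-chatbot", "fraud-classifier"]
print(find_shadow_ai(observed, AI_BOM))  # ['unvetted-chatbot']
```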

7. Build an Agile Security Framework

Agile security frameworks adapt to AI’s rapid evolution while providing immediate protection. They rely on three practices: rapid initial deployment to establish foundational AI security quickly, iterative refinement through short update cycles, and priority-based evolution that addresses critical risks first.

8. Choose the Right AI Security Tools

Evaluation Criteria:

- Real-time threat detection and response capabilities

- Integration with existing security infrastructure

- Scalability across dynamic cloud environments

- Vendor track record and support quality

- Compliance with industry standards and regulations

9. Secure Your AI Supply Chain

Most businesses rely on third-party models, APIs, and open-source libraries, creating supply chain risk where compromised dependencies or malicious code can introduce vulnerabilities. Vet all AI components thoroughly and maintain an updated inventory.
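
One common way to vet a third-party model or library artifact is to pin its cryptographic digest and refuse anything that does not match. The sketch below assumes the trusted digest was recorded when the artifact was first reviewed; the byte strings stand in for real downloaded files and are hypothetical.

```python
import hashlib
import hmac


def verify_artifact(data: bytes, pinned_sha256: str) -> bool:
    """Compare the artifact's SHA-256 digest with the pinned value."""
    actual = hashlib.sha256(data).hexdigest()
    return hmac.compare_digest(actual, pinned_sha256)


# Placeholder bytes standing in for a vetted model file.
trusted_bytes = b"model-weights-v1"
PINNED_DIGEST = hashlib.sha256(trusted_bytes).hexdigest()

print(verify_artifact(trusted_bytes, PINNED_DIGEST))                 # True
print(verify_artifact(b"model-weights-v1-tampered", PINNED_DIGEST))  # False
```

Storing the pinned digest alongside each entry in the component inventory makes the check repeatable on every build or deployment.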

10. Stay Current and Adaptable

Ongoing Commitments:

- Subscribe to threat intelligence feeds from trusted sources

- Participate in industry information-sharing communities

- Regularly update AI models with new threat data

- Review and update security policies quarterly

- Conduct annual security audits focusing on AI-specific risks

| Layer | Tools / Tactics | Why it matters |
|---|---|---|
| Email Security | AI-based filtering, sandboxing, link rewriting, DMARC/SPF/DKIM | Stops spear-phishing and malicious attachments before delivery |
| Endpoint Protection | EDR with ML (CrowdStrike, SentinelOne), behavioral blocking | Detects lateral movement and polymorphic malware behavior |
| UEBA / SIEM | UEBA, SIEM with ML triage (Splunk, Elastic) | Baselines behavior and reduces alert fatigue |
| SOAR & Automation | Automated playbooks for containment, sandbox analysis | Speeds containment and reduces manual gaps |
| Authentication | FIDO2 hardware keys, phishing-resistant MFA | Prevents credential theft impact even after successful phishing |
| Training & Culture | AI-driven realistic simulations, no-blame reporting policy | Reduces click rate and encourages swift reporting |
| AI-Governance | AI-BOM, approved tools list, shadow-AI monitoring | Prevents data leakage and unmanaged AI risks |
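
For the email security layer in the table above, a filter can read the SPF, DKIM, and DMARC verdicts that a receiving mail server records in the `Authentication-Results` header. The sketch below is a simplified parser, assuming a single unfolded header added by your own trusted mail server; the sample message, domain names, and quarantine rule are hypothetical.

```python
import re
from email import message_from_string

# Hypothetical message as it might look after the receiving server's checks.
RAW_MESSAGE = (
    "Authentication-Results: mx.example.com; spf=pass smtp.mailfrom=example.com; "
    "dkim=pass header.d=example.com; dmarc=fail header.from=example.com\n"
    "From: ceo@example.com\n"
    "Subject: Urgent wire transfer\n"
    "\n"
    "Please process today.\n"
)


def auth_results(raw_message: str) -> dict:
    """Extract spf/dkim/dmarc verdicts from the Authentication-Results header."""
    msg = message_from_string(raw_message)
    header = msg.get("Authentication-Results", "")
    pairs = re.findall(r"\b(spf|dkim|dmarc)=(\w+)", header)
    return {method: result.lower() for method, result in pairs}


def should_quarantine(raw_message: str) -> bool:
    """Assumed policy: quarantine anything without a DMARC pass."""
    return auth_results(raw_message).get("dmarc") != "pass"


print(auth_results(RAW_MESSAGE))
print(should_quarantine(RAW_MESSAGE))  # True
```

Header-based checks only cover spoofed senders; a well-written message from a compromised legitimate account passes all three, which is why the table pairs them with content filtering and phishing-resistant MFA.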

The Bottom Line

The AI revolution in cybersecurity is not coming—it’s already here. The AI arms race of 2025 has created a new reality where the baseline for attacks has been permanently elevated, with human-centric threats like deepfake fraud and hyper-realistic phishing now mainstream tactics.

Organizations face a clear choice: embrace AI-powered security solutions proactively or become victims of AI-powered attacks. The good news is that AI offers defenders powerful capabilities to detect, prevent, and respond to threats at machine speed. The key is implementing a comprehensive strategy that combines the right tools, processes, and people.

Surviving in this landscape requires a strategic pivot to a proactive posture built on a non-negotiable foundation of Zero Trust and validated through continuous testing. Those who act now will be positioned to thrive in this new era of AI-enhanced cybersecurity. Those who delay risk becoming cautionary tales.

The battlefield has evolved, and AI is the weapon that determines victory or defeat. The question isn’t whether to adopt AI-powered security—it’s how quickly you can deploy it effectively.